Introduction

In past releases, Network Director greatly reduced the complexity of provisioning and managing topologies such as Virtual Chassis Fabric and Layer 3 Fabric. Network Director 3.0 supports provisioning and managing Junos Fusion systems. This article illustrates how Network Director automates the deployment of multihome Junos Fusion by handling several of the tasks required to set it up.

Multihome Junos Fusion

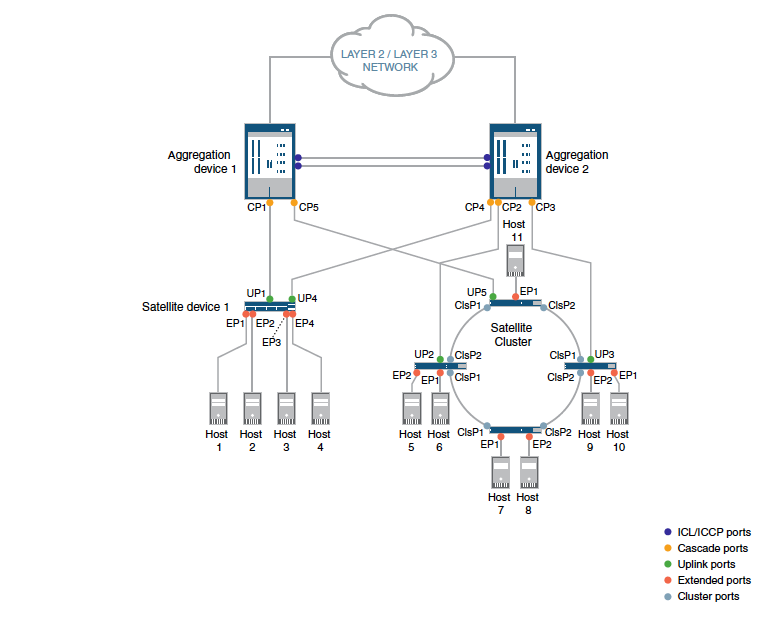

A multihome Junos Fusion topology has two aggregation devices, and each satellite (standalone or cluster) has redundant links to both aggregation devices for high availability. In Network Director 3.0, all variants (SKUs) of EX92XX switches are supported as aggregation devices, and various EX4300 models are supported as satellite devices.

Figure 1: Junos Fusion Enterprise Multihome Topology

Templates

In large campus and data center environments, it is common to have multiple instances of a similar topology (Virtual Chassis Fabric, Junos Fusion, Layer 3 Fabric, and so on). Creating these instances one at a time is not only cumbersome but also error-prone and difficult to manage. Settings common to several instances can be abstracted into a template and applied to bring up one or many instances. A template contains no instance-specific configuration; the user provides the instance-specific attributes when applying or assigning the template. The user can create as many templates as required for different topologies. After a template is created, it appears on the template landing page, from which the user can perform template-related operations.
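To make the split between shared and instance-specific settings concrete, here is a minimal Python sketch. The classes and fields (FusionTemplate, InstanceParams, TemplateRegistry) are hypothetical and do not reflect Network Director's internal data model; the sketch only illustrates that a template carries no instance-specific values, that each apply call supplies them, and that a per-template instance count follows naturally.

```python
from dataclasses import dataclass, field


# Hypothetical data model for illustration; not Network Director's schema.
@dataclass(frozen=True)
class FusionTemplate:
    """Settings shared by every instance; nothing instance-specific."""
    name: str
    topology: str          # "single-home" or "multihome"
    cascade_ports: tuple   # e.g. ("xe-2/0/0", "xe-2/0/2")
    snos_image: str        # default SNOS image file name (hypothetical)


@dataclass(frozen=True)
class InstanceParams:
    """Instance-specific values supplied when the template is applied."""
    serial_or_mac: str
    iccp_local_ip: str


@dataclass(frozen=True)
class FusionInstance:
    template: FusionTemplate
    params: InstanceParams


@dataclass
class TemplateRegistry:
    """Records every instance created from a template."""
    instances: list = field(default_factory=list)

    def apply_template(self, template, params_list):
        """Create one instance per set of instance-specific parameters."""
        created = [FusionInstance(template, p) for p in params_list]
        self.instances.extend(created)
        return created

    def instance_count(self, template_name):
        """Number of instances the named template has been applied to."""
        return sum(1 for inst in self.instances if inst.template.name == template_name)


# Usage: one template, two instances that differ only in their parameters.
registry = TemplateRegistry()
campus = FusionTemplate("campus-mh", "multihome", ("xe-2/0/0", "xe-2/0/2"), "snos.tgz")
registry.apply_template(campus, [InstanceParams("SERIAL-A", "10.1.1.1"),
                                 InstanceParams("SERIAL-B", "10.1.2.1")])
```

Because the template object is shared and immutable, every instance created from it sees the same settings, and only the parameters differ.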

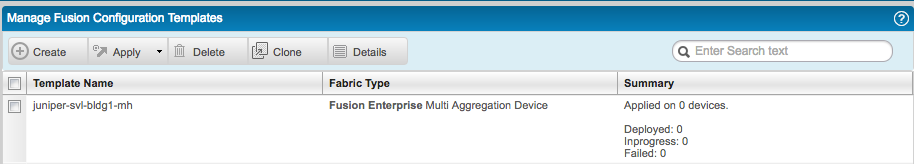

A global task, “Network Builder,” is available in Build mode in Network Director 3.0. Under this task is a subtask, “Manage Templates,” for managing templates. Selecting this subtask takes the user to a landing page that lists all the templates created using Network Director. From this page, the user can create a template, clone an existing template, view the details of a template, and delete a template.

Note: The user cannot edit a template once it is created.

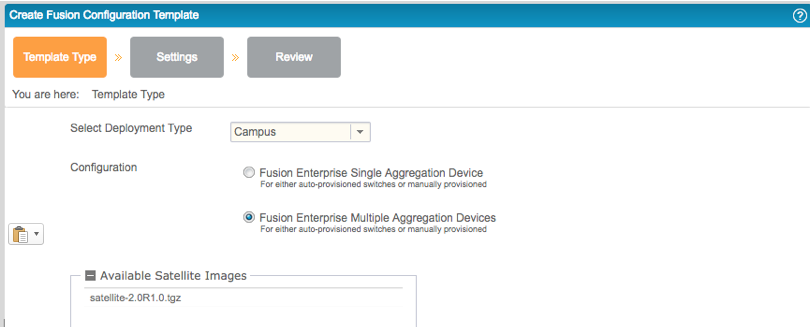

Create Junos Fusion Template – Multihome with a satellite cluster

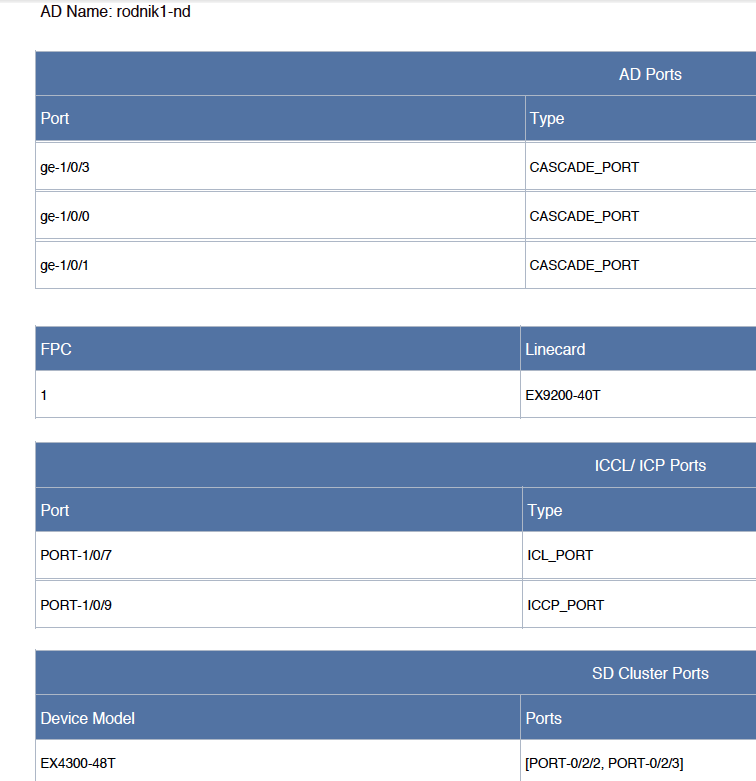

The user initiates the workflow to create a template from the template landing page. The Create Template wizard prompts for input based on the selected topology (single-home or dual-home). First, the user builds the chassis of the aggregation device by mapping each line card to its slot as found in the physical device. Next, the user specifies the cascade ports (that is, the ports that connect to satellite devices) on the aggregation device; for a dual-home topology, the user is also prompted for the ICL and ICCP ports that connect the two aggregation devices. If the topology has a cluster of satellite devices, the user also specifies the ports on the satellite device models that connect the members into a ring. These are termed cluster ports. Finally, the user selects the default SNOS image to be loaded on the satellite device model when it connects to the aggregation device. Once created, the template appears on the template landing page and can be applied to bring up one or many instances of Junos Fusion.
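The inputs the wizard collects depend on the topology. The following Python sketch checks a draft template for completeness under that rule. The TemplateDraft fields and the validate function are hypothetical, and the checks are illustrative rather than Network Director's actual validation logic.

```python
from dataclasses import dataclass, field
from typing import Optional


# Hypothetical model of the wizard's inputs; field names are illustrative.
@dataclass
class TemplateDraft:
    topology: str                                          # "single-home" or "dual-home"
    slot_to_line_card: dict = field(default_factory=dict)  # slot -> line card model
    cascade_ports: list = field(default_factory=list)
    icl_port: Optional[str] = None
    iccp_port: Optional[str] = None
    has_cluster: bool = False
    cluster_ports: dict = field(default_factory=dict)      # satellite model -> ports
    snos_image: Optional[str] = None


def validate(draft: TemplateDraft) -> list:
    """Return the inputs still missing; an empty list means the draft is complete.

    These checks are illustrative, not Network Director's actual rules.
    """
    errors = []
    if not draft.slot_to_line_card:
        errors.append("map at least one line card to a slot")
    if not draft.cascade_ports:
        errors.append("select at least one cascade port")
    if draft.topology == "dual-home":
        # Dual-home needs exactly one ICL port and one ICCP port.
        if not draft.icl_port or not draft.iccp_port:
            errors.append("dual-home needs one ICL port and one ICCP port")
        elif draft.icl_port == draft.iccp_port:
            errors.append("ICL and ICCP must use different ports")
    if draft.has_cluster and not draft.cluster_ports:
        errors.append("cluster topology needs cluster ports per satellite model")
    if not draft.snos_image:
        errors.append("select a default SNOS image")
    return errors
```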

Note: Before you create a template, the SNOS image must be uploaded to the Network Director image repository, and the Junos Fusion schema must be uploaded to Junos Space.

In Network Director, the task to upload an image to the image repository is available on Image Management > Manage Image Repository in Deploy mode.

Figure 2: Create Fusion Configuration Template - Template Type

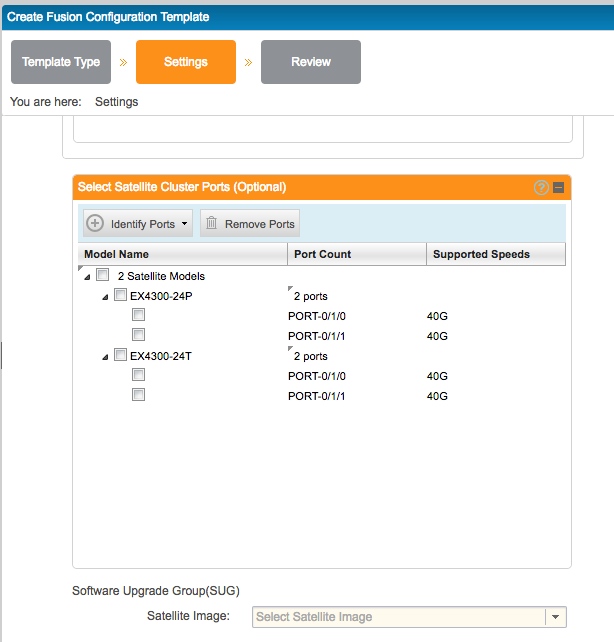

If the topology is likely to include a cluster of satellite devices, the user must specify the ports on the satellite device models that will connect the cluster. Standalone satellites must connect directly to a cascade port on the aggregation device. In a cluster, by contrast, the members are connected in a ring, and at least two members of the cluster connect directly to cascade ports on the aggregation device. The cluster port is the port that connects the members to form the ring topology. Network Director 3.0 supports EX43XX models as satellite devices, and only ports on PIC 1 and PIC 2 can be used as cluster ports. Based on the selected satellite device model, Network Director assists the user by listing only the ports that are supported as cluster ports. The ports highlighted in orange in Figure 1 are cluster ports.
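The cluster port filtering can be sketched as a filter on the PIC number in each port name. The sketch assumes Junos type-fpc/pic/port interface names and uses a hypothetical port list for a 48-port EX4300 model (48 ports on PIC 0, four on PIC 1, four on PIC 2); check the actual port layout of your satellite models.

```python
import re

# Hypothetical port list for a 48-port EX4300 model. FPC 0 is used because
# no satellite slot has been assigned when the template is built.
EX4300_PORTS = (
    [f"ge-0/0/{n}" for n in range(48)]
    + [f"et-0/1/{n}" for n in range(4)]
    + [f"xe-0/2/{n}" for n in range(4)]
)

# type-fpc/pic/port, for example "xe-0/2/3"
PORT_RE = re.compile(r"^[a-z]+-(\d+)/(\d+)/(\d+)$")
CLUSTER_PICS = {1, 2}   # only PIC 1 and PIC 2 ports may be cluster ports


def cluster_port_candidates(ports):
    """Keep only the ports whose PIC number allows them to be cluster ports."""
    candidates = []
    for name in ports:
        match = PORT_RE.match(name)
        if match and int(match.group(2)) in CLUSTER_PICS:
            candidates.append(name)
    return candidates
```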

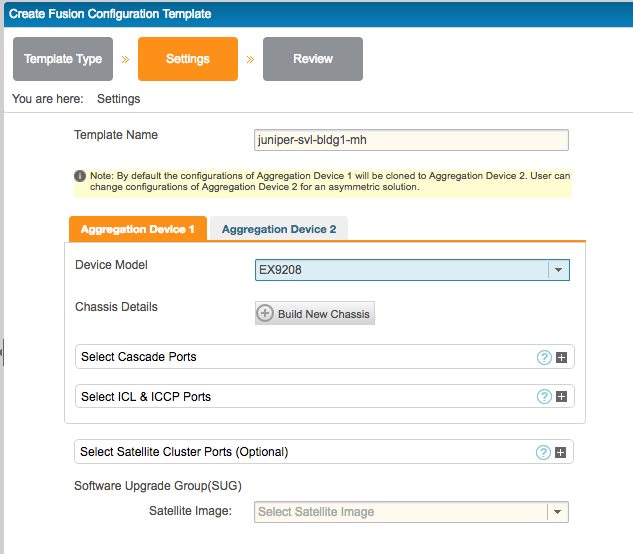

Figure 3: Create Fusion Configuration Template - Aggregation Device Selection

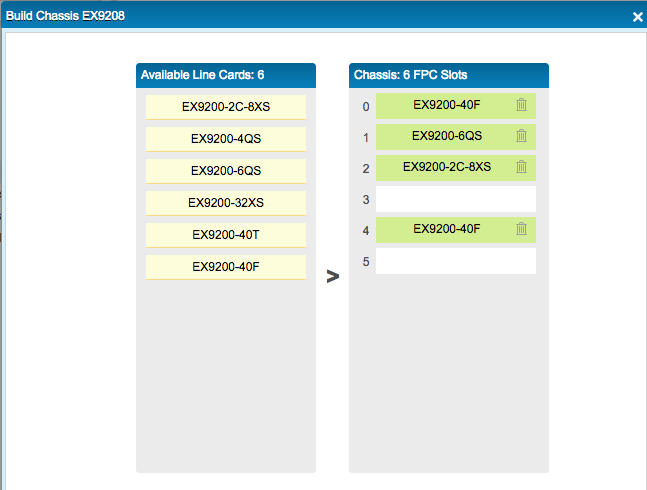

Figure 4: Build Chassis for Aggregation Device

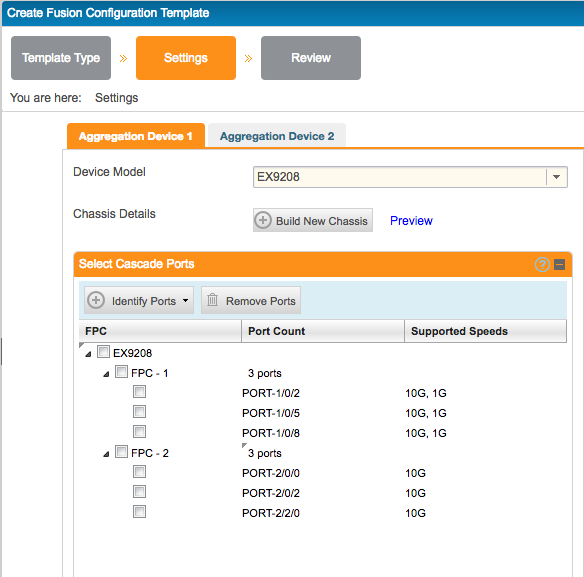

The next step in creating a template is to define the aggregation device model and map the line card models to their slots as found in the physical device. In a multihome topology, it is common for the two aggregation devices to be different models or SKUs, or to have different line card mappings (that is, the two aggregation devices need not be symmetric). By default, Network Director assumes that both aggregation devices are symmetric (that is, the same device model, with the same line card model mapped to the same slot on both devices). If this is not the case, the user can specify the model and line card mapping for aggregation device 2 by switching to the Aggregation Device 2 tab and selecting the “Edit” option in the workflow.
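A minimal sketch of the symmetric default, assuming a hypothetical chassis definition of the form {"model": ..., "slots": {slot: line_card_model}}. Aggregation device 2 starts as a deep copy of aggregation device 1, so later edits to one do not leak into the other, unless the user supplies a separate definition.

```python
import copy
from typing import Optional

# Hypothetical chassis definition: {"model": str, "slots": {slot: line_card_model}}


def resolve_ad2(ad1: dict, ad2_override: Optional[dict] = None) -> dict:
    """Return the chassis definition for aggregation device 2.

    Without an override, AD2 is a copy of AD1 (the symmetric default).
    An override, as entered on the Aggregation Device 2 tab, replaces it entirely.
    """
    source = ad1 if ad2_override is None else ad2_override
    return copy.deepcopy(source)


def is_symmetric(ad1: dict, ad2: dict) -> bool:
    """Same model, and the same line card model in the same slot, on both devices."""
    return ad1["model"] == ad2["model"] and ad1["slots"] == ad2["slots"]
```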

Network Director helps the user select the cascade port based on the line card-to-slot mapping provided earlier.

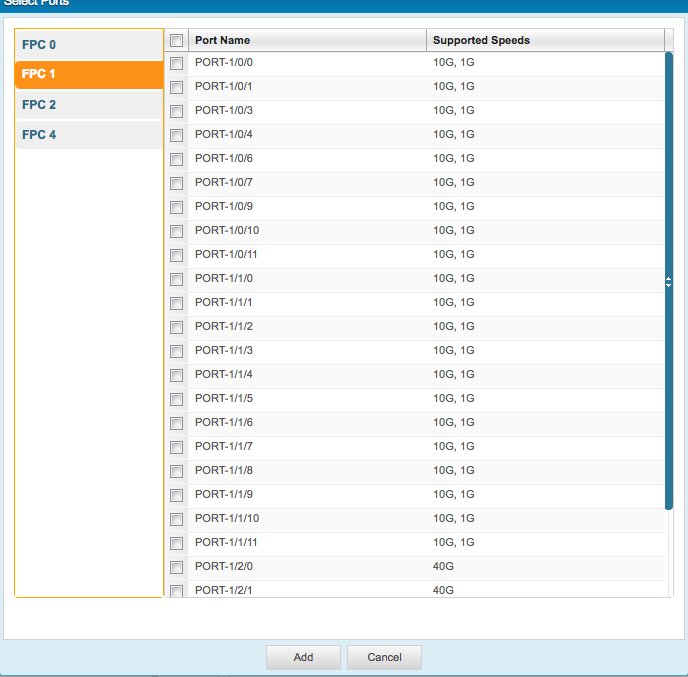

Figure 5: Port Selection User Interface

As Figure 5 shows, Network Director lists the mapped slots in the left pane, along with the ports (and their speeds) of the line card model mapped to each slot.
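The port listing can be thought of as expanding the slot-to-line-card mapping into interface names. In this sketch the line card catalog, model names, and port counts are hypothetical placeholders, not real EX9200 line card data; only the type-fpc/pic/port naming follows the Junos convention.

```python
# Hypothetical line card catalog: model -> list of (PIC, port count, speed, prefix).
LINE_CARDS = {
    "LC-40x1G": [(0, 40, "1G", "ge")],
    "LC-32x10G": [(0, 32, "10G", "xe")],
}


def ports_for_chassis(slot_to_card):
    """Return (slot, port name, speed) for every port of every mapped slot,
    ordered by slot number, as a port selection pane might list them."""
    rows = []
    for slot in sorted(slot_to_card):
        for pic, count, speed, prefix in LINE_CARDS[slot_to_card[slot]]:
            for port in range(count):
                rows.append((slot, f"{prefix}-{slot}/{pic}/{port}", speed))
    return rows
```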

Figure 6: Cascade port selection

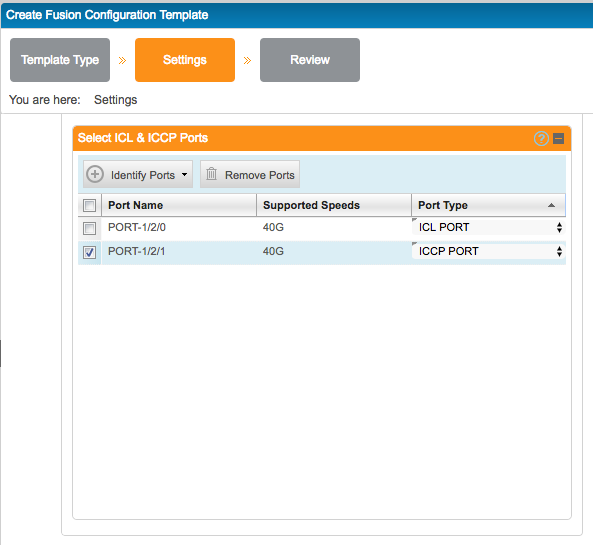

In a multihome topology, the two aggregation devices are connected to each other to carry ICL and ICCP traffic (shown in blue in Figure 1). The user must select one port for the ICL connection and one port for the ICCP connection.

Figure 7: ICL/ICCP Port selection

If there are clusters in the multihome topology, the user must select the cluster ports for every satellite device model in the cluster (that is, the ports that interconnect the satellite devices that are part of the same cluster).

Figure 8: Cluster Ports Selection

The last input in template creation is the satellite image (SNOS) that the satellite device runs by default when it joins the Junos Fusion Enterprise system. When the user clicks Finish in the wizard, the template is saved in the Network Director database and is ready to be applied to one or more devices that will act as aggregation devices in Junos Fusion Enterprise. Once the template is saved successfully, it is listed on the landing page.

Figure 9: Manage Fusion Configuration Templates Landing page

Apply Junos Fusion Template - Multihome

To apply a template, the user selects the template on the template landing page and clicks Apply.

Network Director 3.0 supports two use cases in setting up Junos Fusion:

- Junos Fusion setup with devices running factory default configuration

- Junos Fusion setup by converting existing devices that are connected

This article walks through the Junos Fusion setup with devices running the factory default configuration. The other use case, converting an existing setup to Junos Fusion, is covered in a separate article.

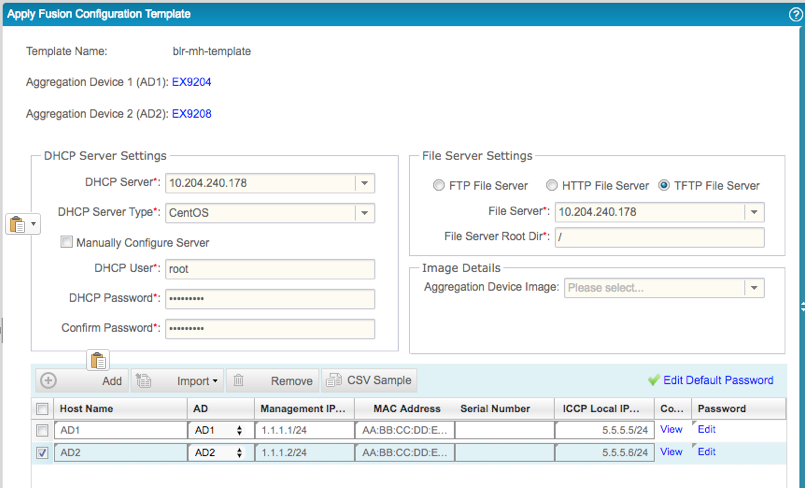

Junos Fusion setup with devices running factory default configuration

This use case addresses the need to bring up Junos Fusion with devices (aggregation and satellite devices) that are not managed by Network Director and are running the factory default configuration. When the user invokes the Apply > Unmanaged Devices action from the template landing page, Network Director prompts for the DHCP server and file server details, because the devices go through Zero Touch Provisioning once they are brought into the network. The only other information the user must provide is the serial number or MAC address of each aggregation device and its ICCP local IP address. The user can provide multiple pairs of aggregation devices to which the multihome template is applied. However, the user does not need to map the aggregation devices into pairs manually: Network Director identifies each pair based on the physical connection. Network Director also automates the configuration of redundancy groups and satellite devices as devices are connected.

Figure 10: Apply Junos Fusion Template - Multihome

The following is the configuration that Network Director stages on the file server for an aggregation device:

# Cascade port interfaces. If an interface supports multiple speeds, the configuration is set for each supported speed.
interfaces {
    ge-1/0/2 {
        cascade-port;
    }
}
interfaces {
    xe-1/0/2 {
        cascade-port;
    }
}
interfaces {
    ge-1/0/5 {
        cascade-port;
    }
}
interfaces {
    xe-1/0/5 {
        cascade-port;
    }
}
interfaces {
    ge-1/0/8 {
        cascade-port;
    }
}
interfaces {
    xe-1/0/8 {
        cascade-port;
    }
}
interfaces {
    xe-2/0/0 {
        cascade-port;
    }
}
interfaces {
    xe-2/0/2 {
        cascade-port;
    }
}
interfaces {
    xe-2/2/0 {
        cascade-port;
    }
}

# Configuration to enable autosatellite conversion
chassis {
    satellite-management {
        auto-satellite-conversion {
            satellite all;
        }
    }
}

# Configuration to bind all satellites to a software upgrade group (SUG),
# required for autosatellite conversion
chassis {
    satellite-management {
        upgrade-groups {
            default-sug {
                satellite all;
            }
        }
    }
}

# ICCP interface configuration
interfaces {
    et-1/2/1 {
        unit 0 {
            family inet {
                address 5.5.5.5/24;
            }
        }
    }
}

# ICL interface configuration
interfaces {
    et-1/2/0 {
        unit 0 {
            family ethernet-switching {
                interface-mode trunk;
                vlan {
                    members all;
                }
            }
        }
    }
}

# Configuration required for multichassis operation
switch-options {
    service-id 2;
}
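The per-speed expansion described in the cascade-port comment of the staged configuration can be sketched as follows. The speed-to-prefix table and the input format are assumptions made for illustration (ge for 1-Gigabit, xe for 10-Gigabit, and et for 40-Gigabit interfaces, as in Junos naming); the output follows the one-stanza-per-interface layout of the staged configuration.

```python
# Hypothetical map from a supported speed to its Junos interface prefix.
SPEED_PREFIX = {"1G": "ge", "10G": "xe", "40G": "et"}


def cascade_port_stanzas(ports):
    """ports: list of (fpc/pic/port, [supported speeds]) tuples.

    Emits one interfaces { <name> { cascade-port; } } stanza per supported
    speed, so a dual-speed port appears once under each prefix.
    """
    stanzas = []
    for location, speeds in ports:
        for speed in speeds:
            name = f"{SPEED_PREFIX[speed]}-{location}"
            stanzas.append(
                "interfaces {\n"
                f"    {name} {{\n"
                "        cascade-port;\n"
                "    }\n"
                "}"
            )
    return "\n".join(stanzas)


print(cascade_port_stanzas([("1/0/2", ["1G", "10G"]), ("2/0/0", ["10G"])]))
```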

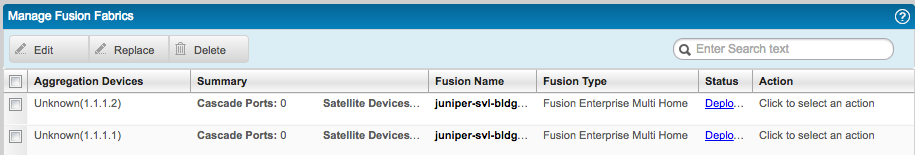

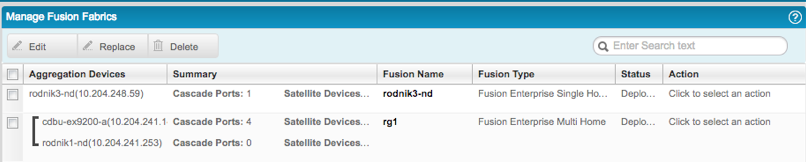

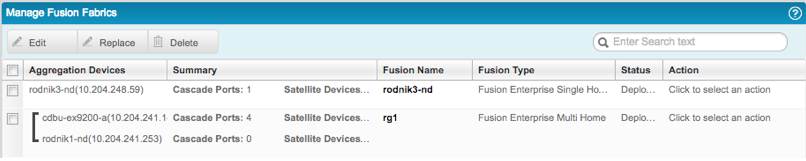

Once the template is applied successfully, the template landing page shows the number of instances to which the template has been applied. Until the aggregation devices are paired, the Manage Fusion Fabrics landing page lists them as separate entities (as shown in Figure 11).

Figure 11: Manage Fusion Fabrics listing unpaired aggregation devices

Discovery or Management of Aggregation Devices in Network Director

When an aggregation device running the Junos factory default configuration is brought into the network, it goes through zero touch provisioning (ZTP) and is autodiscovered and managed in Network Director. The device obtains its IP address from the DHCP server and the Network Director-generated configuration from the file server. Because Network Director is configured as a trap recipient, it is notified when the device comes online, and it discovers and automanages the aggregation device. If the IP address of the discovered device is allocated to a Junos Fusion aggregation device, Network Director checks whether a template is assigned to that device. If a template is found, Network Director obtains the default SNOS image from the template and pushes it to the discovered aggregation device. Network Director also executes the RPC that maps the SNOS image to the default software upgrade group (SUG). The SNOS image is required for autosatellite conversion.
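The decision flow on discovery can be sketched as below. Inventory is a hypothetical stand-in for Network Director's database, and the push and mapping steps are only recorded as strings; the actual RPC Network Director uses to map the image to the SUG is not named in this article, so none is invented here.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Inventory:
    """Hypothetical stand-in for Network Director's database."""
    fusion_ad_ips: set       # IP addresses allocated to aggregation devices
    template_by_ip: dict     # device IP -> assigned template name
    snos_by_template: dict   # template name -> default SNOS image
    actions: list = field(default_factory=list)   # recorded steps, in order


def on_device_discovered(inv: Inventory, ip: str) -> Optional[str]:
    """Prepare a newly discovered device for autosatellite conversion.

    Returns the SNOS image pushed, or None when the device is not an
    aggregation device or has no template assigned.
    """
    if ip not in inv.fusion_ad_ips:
        return None
    template = inv.template_by_ip.get(ip)
    if template is None:
        return None
    image = inv.snos_by_template[template]
    inv.actions.append(f"push {image} to {ip}")
    inv.actions.append(f"map {image} to default-sug on {ip}")
    return image
```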

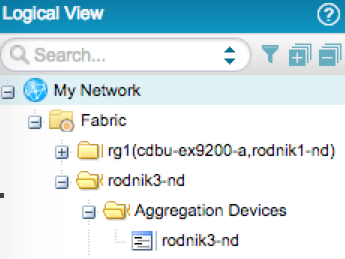

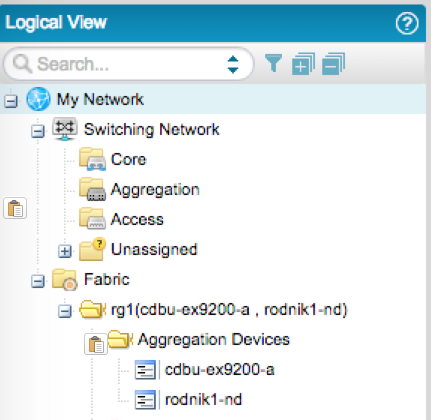

The logical tree view groups the aggregation devices under the redundancy group name, which serves as the container node in the tree. As the staged configuration shows, there is no configuration related to the redundancy group, so Network Director cannot show the devices as paired. Instead, until the pairing happens, it represents the two aggregation devices as separate entities, as in a single-home topology (see Figure 12).

Figure 12: Logical Tree View Representation of Aggregation Devices After Device Discovery

Cabling Plan

After applying the template, the next step is to make the connections according to the cabling plan. A fully loaded Junos Fusion Enterprise system can support up to 128 satellite devices, so making every connection without errors is difficult. Network Director helps by providing a cabling plan report in PDF format, which the user can download and use as a reference when connecting the aggregation devices and the satellite devices.
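To illustrate what a cabling plan contains, here is a sketch that emits the connections as CSV rows rather than PDF. The column layout, the satellite naming, and the assumption that both aggregation devices use the same port names (the symmetric default) are hypothetical; cluster ports are omitted for brevity.

```python
import csv
import io


def cabling_plan(ad_names, cascade_ports, icl_port, iccp_port):
    """Return CSV text listing each required connection (hypothetical layout).

    ad_names: (AD1 name, AD2 name). In a multihome topology, satellite n is
    cabled to cascade port n on both aggregation devices.
    """
    ad1, ad2 = ad_names
    rows = [("Connection", "Device A", "Port A", "Device B", "Port B")]
    rows.append(("ICL", ad1, icl_port, ad2, icl_port))
    rows.append(("ICCP", ad1, iccp_port, ad2, iccp_port))
    for n, port in enumerate(cascade_ports, start=1):
        for ad in ad_names:
            rows.append(("Cascade", ad, port, f"satellite-{n}", "uplink"))
    buf = io.StringIO()
    csv.writer(buf).writerows(rows)
    return buf.getvalue()


print(cabling_plan(("AD_18", "AD_84"), ["ge-1/0/1"], "ge-1/0/5", "ge-1/0/7"))
```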

Following is a sample cabling plan showing cascade ports, ICL and ICCP ports, and cluster ports.

Multihome Pairing Automation

As the generated configuration shows, a multihome topology has no configuration related to redundancy groups at this point. Also, when the multihome template is applied to aggregation devices, the user provides only the serial number or MAC address and does not specify which aggregation devices are to be paired. Network Director identifies devices as multihome only if the redundancy group configuration exists on the aggregation device. Therefore, when the aggregation devices are discovered in Network Director, they are shown as single-home on the Manage Fusion Fabrics landing page.

For multihome pairing to take place, the user must first connect the ICL and ICCP ports between the two aggregation devices, before connecting any satellite-capable devices to the cascade ports. When these links come up, Network Director is notified and checks whether each connection is on the ICL or ICCP port defined in the applied template. Network Director then creates a separate asynchronous job for each aggregation device, which provisions the redundancy group configuration on that device. Network Director also automates the setting of chassis-id, peer-chassis-id, and system-mac-address on both aggregation devices. After the redundancy group configuration is pushed successfully, Network Director automatically deletes and rediscovers the devices. After rediscovery and a successful brownfield process, the devices are shown as multihome in both the perspective tree view and the Manage Fusion Fabrics landing page.
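The redundancy group configuration for a pair can be sketched as one generator called twice, with the chassis IDs and ICCP addresses swapped and the system MAC address shared. The command syntax follows the show chassis | display set and show protocols | display set output from the sample devices in this article, and the liveness-detection timers are copied from that sample. How Network Director chooses the chassis IDs and the system MAC address is not described here, so the sketch takes them as inputs.

```python
RG = "set chassis satellite-management redundancy-groups"


def rg_commands(chassis_id, peer_chassis_id, icl, local_ip, peer_ip,
                system_mac, rg_name="rg1", rg_id=1):
    """Build the redundancy group and ICCP set commands for one aggregation device."""
    peer = f"set protocols iccp peer {peer_ip}"
    return [
        f"{RG} chassis-id {chassis_id}",
        f"{RG} {rg_name} redundancy-group-id {rg_id}",
        f"{RG} {rg_name} peer-chassis-id {peer_chassis_id} inter-chassis-link {icl}",
        f"{RG} {rg_name} satellite all",
        f"{RG} {rg_name} system-mac-address {system_mac}",
        f"{peer} redundancy-group-id-list {rg_id}",
        # Timer values copied from the sample device output.
        f"{peer} liveness-detection minimum-receive-interval 10",
        f"{peer} liveness-detection transmit-interval minimum-interval 10",
        f"{peer} liveness-detection detection-time threshold 100",
        f"set protocols iccp local-ip-addr {local_ip}",
    ]


def pair_commands(ad1, ad2, icl, system_mac):
    """ad1, ad2: (chassis_id, iccp_ip) tuples. Returns (AD1 commands, AD2 commands).

    Each device names the other as its peer; both share one system MAC address.
    """
    return (
        rg_commands(ad1[0], ad2[0], icl, ad1[1], ad2[1], system_mac),
        rg_commands(ad2[0], ad1[0], icl, ad2[1], ad1[1], system_mac),
    )
```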

Configuration pushed to aggregation device 1:

set chassis satellite-management redundancy-groups chassis-id 2
set chassis satellite-management redundancy-groups rg-group rg1
set chassis satellite-management redundancy-groups rg-group rg1 peer-chassis-id 1
set chassis satellite-management redundancy-groups rg-group rg1 peer-chassis-id 1 inter-chassis-link ge-1/0/5
set chassis satellite-management redundancy-groups rg-group rg1 satellite all
set chassis satellite-management redundancy-groups rg-group rg1 redundancy-group-id 1
set chassis satellite-management redundancy-groups rg-group rg1 system-mac-address 00:a0:a5:89:0a:ec
set protocols iccp peer 30.30.30.2
set protocols iccp peer 30.30.30.2 redundancy-group-id-list 1
set protocols iccp peer 30.30.30.2 liveness-detection detection-time threshold 100
set protocols iccp peer 30.30.30.2 liveness-detection minimum-receive-interval 10
set protocols iccp peer 30.30.30.2 liveness-detection transmit-interval minimum-interval 10
set protocols iccp local-ip-addr 30.30.30.1

Device output from aggregation device 1:

root@AD_18# run show iccp
Redundancy Group Information for peer 30.30.30.2
TCP Connection : Established
Liveliness Detection : Up
Redundancy Group ID Status
1 Up
Client Application: l2ald_iccpd_client
Redundancy Group IDs Joined: 1

root@AD_18# show interfaces | display set
set interfaces ge-1/0/1 cascade-port
set interfaces ge-1/0/5 unit 0 family ethernet-switching interface-mode trunk
set interfaces ge-1/0/5 unit 0 family ethernet-switching vlan members all
set interfaces ge-1/0/7 unit 0 family inet address 30.30.30.1/24

root@AD_18# show chassis | display set
set chassis satellite-management fpc 100 alias sd100
set chassis satellite-management fpc 100 cascade-ports ge-1/0/1
set chassis satellite-management redundancy-groups chassis-id 2
set chassis satellite-management redundancy-groups rg1 redundancy-group-id 1
set chassis satellite-management redundancy-groups rg1 peer-chassis-id 1 inter-chassis-link ge-1/0/5
set chassis satellite-management redundancy-groups rg1 satellite all
set chassis satellite-management redundancy-groups rg1 system-mac-address 00:a0:a5:89:0a:ec
set chassis satellite-management upgrade-groups default-sug satellite all
set chassis satellite-management auto-satellite-conversion satellite all

root@AD_18# show protocols | display set
set protocols iccp local-ip-addr 30.30.30.1
set protocols iccp peer 30.30.30.2 redundancy-group-id-list 1
set protocols iccp peer 30.30.30.2 liveness-detection minimum-receive-interval 10
set protocols iccp peer 30.30.30.2 liveness-detection transmit-interval minimum-interval 10
set protocols iccp peer 30.30.30.2 liveness-detection detection-time threshold 100
set protocols lldp interface all

[edit]
root@AD_18# show switch-options | display set
set switch-options service-id 2

Configuration pushed to aggregation device 2:

set chassis satellite-management redundancy-groups chassis-id 1
set chassis satellite-management redundancy-groups rg-group rg1
set chassis satellite-management redundancy-groups rg-group rg1 peer-chassis-id 2
set chassis satellite-management redundancy-groups rg-group rg1 peer-chassis-id 2 inter-chassis-link ge-1/0/5
set chassis satellite-management redundancy-groups rg-group rg1 satellite all
set chassis satellite-management redundancy-groups rg-group rg1 redundancy-group-id 1
set chassis satellite-management redundancy-groups rg-group rg1 system-mac-address 00:a0:a5:89:0a:ec
set protocols iccp peer 30.30.30.1
set protocols iccp peer 30.30.30.1 redundancy-group-id-list 1
set protocols iccp peer 30.30.30.1 liveness-detection detection-time threshold 100
set protocols iccp peer 30.30.30.1 liveness-detection minimum-receive-interval 10
set protocols iccp peer 30.30.30.1 liveness-detection transmit-interval minimum-interval 10
set protocols iccp local-ip-addr 30.30.30.2

Device output from aggregation device 2:

root@AD_84# run show iccp
Redundancy Group Information for peer 30.30.30.1
TCP Connection : Established
Liveliness Detection : Up
Redundancy Group ID Status
1 Up
Client Application: l2ald_iccpd_client
Redundancy Group IDs Joined: 1

root@AD_84# show interfaces | display set
set interfaces ge-1/0/1 cascade-port
set interfaces ge-1/0/5 unit 0 family ethernet-switching interface-mode trunk
set interfaces ge-1/0/5 unit 0 family ethernet-switching vlan members all
set interfaces ge-1/0/7 unit 0 family inet address 30.30.30.2/24

root@AD_84# show chassis | display set
set chassis satellite-management fpc 100 alias sd100
set chassis satellite-management fpc 100 cascade-ports ge-1/0/1
set chassis satellite-management redundancy-groups chassis-id 1
set chassis satellite-management redundancy-groups rg1 redundancy-group-id 1
set chassis satellite-management redundancy-groups rg1 peer-chassis-id 2 inter-chassis-link ge-1/0/5
set chassis satellite-management redundancy-groups rg1 satellite all
set chassis satellite-management redundancy-groups rg1 system-mac-address 00:a0:a5:89:0a:ec
set chassis satellite-management upgrade-groups default-sug satellite all
set chassis satellite-management auto-satellite-conversion satellite all

root@AD_84# show protocols | display set
set protocols iccp local-ip-addr 30.30.30.2
set protocols iccp peer 30.30.30.1 redundancy-group-id-list 1
set protocols iccp peer 30.30.30.1 liveness-detection minimum-receive-interval 10
set protocols iccp peer 30.30.30.1 liveness-detection transmit-interval minimum-interval 10
set protocols iccp peer 30.30.30.1 liveness-detection detection-time threshold 100

root@AD_84# show switch-options | display set
set switch-options service-id 2

Figure 13: Manage Fusion Fabrics Landing Page

Figure 14: Logical View

Note: Network Director automates everything required to establish multihome pairing between two aggregation devices, based purely on the physical topology.

Autoconversion from a standalone device to a satellite device

Following the cabling plan, the user connects a satellite-capable device running Junos OS or SNOS (in standalone mode) to one of the configured cascade ports (for example, ge-1/0/5) on one or both aggregation devices. The link comes up, and Network Director is notified of the link event. Network Director then executes a series of RPCs on the aggregation device to determine whether configuration must be pushed for the new connection. If Network Director determines that the standalone device must be converted to a satellite device, it pushes the following configuration to the aggregation device. If the satellite is dual-homed, Network Director pushes the required satellite configuration to both aggregation devices atomically in a single transaction, so that neither device is left in an inconsistent state.

set chassis satellite-management fpc 65
set chassis satellite-management fpc 65 alias sd65
set chassis satellite-management fpc 65 cascade-ports ge-1/0/5

As the configuration shows, Network Director assigns FPC slot ID 65 to the connected device and sets an alias prefixed with “sd”. Network Director iterates through the slot ID range and selects the first available slot ID for the connected device.
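The slot allocation amounts to a first-fit search. This sketch assumes a satellite FPC slot range of 65 through 254 (verify the range for your Junos release) and builds the same three commands shown above for the chosen slot and cascade port.

```python
from typing import Iterable, Optional

# Assumed satellite FPC slot range (65-254); verify for your Junos release.
SLOT_RANGE = range(65, 255)


def allocate_slot(used: Iterable[int]) -> Optional[int]:
    """Return the lowest free satellite slot ID, or None if all are taken."""
    taken = set(used)
    for slot in SLOT_RANGE:
        if slot not in taken:
            return slot
    return None


def satellite_commands(slot: int, cascade_port: str) -> list:
    """Set commands that register a new standalone satellite on a cascade port."""
    base = f"set chassis satellite-management fpc {slot}"
    return [base, f"{base} alias sd{slot}", f"{base} cascade-ports {cascade_port}"]


# Usage: the first satellite on an empty system gets slot 65.
print(satellite_commands(allocate_slot([]), "ge-1/0/5"))
```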

The following are required for autosatellite conversion to take place on the aggregation device:

- SUGs are created and the FPC slot is mapped to one of the SUGs.

- Autosatellite conversion is enabled.

- The SNOS image exists in the aggregation device.

- The SNOS image is mapped to the SUG.

Network Director ensures that the connected device can join Junos Fusion by automating all of these requirements. It automates the first two (the SUG mapping and autosatellite conversion) by creating a default SUG, assigning all the satellites to it, and enabling autosatellite conversion as part of the configuration staged in the DHCP/file server for the aggregation device's serial number or MAC address. It automates the last two (the SNOS image on the device and its mapping to the SUG) by pushing the SNOS image and mapping it to the default SUG during discovery and management of the aggregation device.
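The four requirements can be expressed as a readiness check for a given FPC slot. AggregationDeviceState is a hypothetical snapshot, not a Network Director or Junos API; a "satellite all" SUG is modeled as a set containing every slot.

```python
from dataclasses import dataclass, field


@dataclass
class AggregationDeviceState:
    """Hypothetical snapshot of what is in place on the aggregation device."""
    sug_members: dict = field(default_factory=dict)       # SUG name -> set of FPC slots
    auto_conversion_enabled: bool = False
    images_on_device: set = field(default_factory=set)    # SNOS images present
    sug_image: dict = field(default_factory=dict)         # SUG name -> mapped SNOS image


def missing_requirements(state: AggregationDeviceState, fpc: int) -> list:
    """List which autosatellite conversion requirements are unmet for one FPC slot."""
    missing = []
    # Requirement: the FPC slot is mapped to one of the SUGs.
    sug = next((name for name, slots in state.sug_members.items() if fpc in slots), None)
    if sug is None:
        missing.append("FPC slot is not mapped to any SUG")
    # Requirement: autosatellite conversion is enabled.
    if not state.auto_conversion_enabled:
        missing.append("autosatellite conversion is not enabled")
    # Requirements: an SNOS image is mapped to the SUG and exists on the device.
    image = state.sug_image.get(sug) if sug is not None else None
    if image is None:
        missing.append("no SNOS image is mapped to the SUG")
    elif image not in state.images_on_device:
        missing.append("mapped SNOS image is not present on the aggregation device")
    return missing
```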

Note: Network Director automates all the above requirements for the connected switch to be converted from standalone to satellite mode.

After the device joins Junos Fusion as a satellite member, the aggregation device generates a system log message reporting that the new satellite member is online. Network Director/Junos Space Platform listens for this system log message and updates the physical and logical inventories accordingly.

Configuring logical/subinterfaces on extended ports

In the default Junos OS configuration, a logical interface (unit 0) is configured on every port; extended ports, however, are not configured with unit 0 by default. Because no logical interface exists on the extended ports, LLDP cannot list any neighbors on these ports. Configuring logical interfaces on extended ports by hand is cumbersome given the scale of a fully loaded Junos Fusion. Network Director simplifies this by automatically configuring a logical interface (unit 0) on all the extended ports when it receives the system log message about a new member joining Junos Fusion. Network Director handles every other satellite-capable device connected to a cascade port in the same way, autoconverting it to a satellite member so that it joins Junos Fusion. If multiple satellites join Junos Fusion within a set interval (for example, two minutes), Network Director configures the logical interfaces on the extended ports of all those FPC slots in a single job. The following is the logical interface configuration pushed to the aggregation device:

set interfaces ge-65/0/0 unit 0 family ethernet-switching
set interfaces ge-65/0/1 unit 0 family ethernet-switching
..
..
set interfaces xe-65/2/3 unit 0 family ethernet-switching
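The unit 0 configuration and the batching of join events can be sketched as follows. The input port names (satellite-relative, with FPC 0) and the batching rule (a batch closes two minutes after its first join event) are assumptions for illustration; the article states only that joins within a stipulated duration are handled as a single job.

```python
def unit0_commands(fpc, ports):
    """ports: hypothetical satellite-relative names such as 'ge-0/0/1'.

    Substitutes the assigned FPC slot and emits one unit 0 command per port.
    """
    cmds = []
    for port in ports:
        prefix, rest = port.split("-", 1)   # "ge", "0/0/1"
        _, pic, num = rest.split("/")       # drop the satellite-relative FPC
        cmds.append(f"set interfaces {prefix}-{fpc}/{pic}/{num} unit 0 family ethernet-switching")
    return cmds


def batch_joins(events, window_s=120.0):
    """Group (timestamp_seconds, fpc) join events into jobs.

    Assumed rule: a batch closes window_s seconds after its first event;
    each returned list of FPC slots would be configured as one job.
    """
    batches = []
    start = None
    for ts, fpc in sorted(events):
        if start is None or ts - start > window_s:
            batches.append([])
            start = ts
        batches[-1].append(fpc)
    return batches
```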

Autoconversion from a standalone satellite to a satellite cluster

When the user connects the cluster port (an extended port) on a standalone satellite device that is part of Junos Fusion and running SNOS (for example, FPC 65) to the cluster port on another standalone switch running Junos OS or SNOS, Network Director is notified that the link has come up. Because the cluster port on the standalone satellite device is an extended port on the aggregation device, the aggregation device reports the new link to Network Director. Network Director executes a series of RPCs on the aggregation device and correlates their output to determine whether the standalone satellite device and the standalone switch connected to it through the cluster port need to be converted to a satellite cluster. Network Director does this only if the template used to bring up Junos Fusion has cluster ports configured and the connection matches a cluster port in the template. If both conditions are met, Network Director generates and pushes the CLI commands that convert the standalone satellite device to a cluster and add the standalone switch to that cluster.

AD1:

set chassis satellite-management cluster cluster-1
set chassis satellite-management cluster cluster-1 cluster-id 1
set chassis satellite-management cluster cluster-1 cascade-ports ge-1/0/0
set chassis satellite-management cluster cluster-1 fpc 66
set chassis satellite-management cluster cluster-1 fpc 66 member-id 0
set chassis satellite-management cluster cluster-1 fpc 66 system-id 4c:96:14:ec:20:80
set chassis satellite-management cluster cluster-1 fpc 67
set chassis satellite-management cluster cluster-1 fpc 67 member-id 1
set chassis satellite-management cluster cluster-1 fpc 67 system-id 4c:96:14:e8:d2:e0

set chassis satellite-management cluster cluster-2
set chassis satellite-management cluster cluster-2 cluster-id 2
set chassis satellite-management cluster cluster-2 cascade-ports ge-1/0/1
set chassis satellite-management cluster cluster-2 fpc 65
set chassis satellite-management cluster cluster-2 fpc 65 member-id 0
set chassis satellite-management cluster cluster-2 fpc 65 system-id 4c:96:14:ec:20:20
set chassis satellite-management cluster cluster-2 fpc 68
set chassis satellite-management cluster cluster-2 fpc 68 member-id 1
set chassis satellite-management cluster cluster-2 fpc 68 system-id 4c:96:14:ec:1a:e0

delete chassis satellite-management fpc 65
delete chassis satellite-management fpc 66

set chassis satellite-management redundancy-groups rg-group rg1
set chassis satellite-management redundancy-groups rg-group rg1 cluster cluster-1
set chassis satellite-management redundancy-groups rg-group rg1 cluster cluster-2

set chassis satellite-management upgrade-groups upgrade-group default-sug
delete chassis satellite-management upgrade-groups upgrade-group default-sug satellite all
set chassis satellite-management upgrade-groups upgrade-group default-sug satellite 69-253

AD2:

set chassis satellite-management cluster cluster-1
set chassis satellite-management cluster cluster-1 cluster-id 1
set chassis satellite-management cluster cluster-1 cascade-ports ge-1/0/0
set chassis satellite-management cluster cluster-1 fpc 66
set chassis satellite-management cluster cluster-1 fpc 66 member-id 0
set chassis satellite-management cluster cluster-1 fpc 66 system-id 4c:96:14:ec:20:80
set chassis satellite-management cluster cluster-1 fpc 67
set chassis satellite-management cluster cluster-1 fpc 67 member-id 1
set chassis satellite-management cluster cluster-1 fpc 67 system-id 4c:96:14:e8:d2:e0

set chassis satellite-management cluster cluster-2
set chassis satellite-management cluster cluster-2 cluster-id 2
set chassis satellite-management cluster cluster-2 cascade-ports ge-1/0/1
set chassis satellite-management cluster cluster-2 fpc 65
set chassis satellite-management cluster cluster-2 fpc 65 member-id 0
set chassis satellite-management cluster cluster-2 fpc 65 system-id 4c:96:14:ec:20:20
set chassis satellite-management cluster cluster-2 fpc 68
set chassis satellite-management cluster cluster-2 fpc 68 member-id 1
set chassis satellite-management cluster cluster-2 fpc 68 system-id 4c:96:14:ec:1a:e0

delete chassis satellite-management fpc 65
delete chassis satellite-management fpc 66

set chassis satellite-management redundancy-groups rg-group rg1
set chassis satellite-management redundancy-groups rg-group rg1 cluster cluster-1
set chassis satellite-management redundancy-groups rg-group rg1 cluster cluster-2

set chassis satellite-management upgrade-groups upgrade-group default-sug
delete chassis satellite-management upgrade-groups upgrade-group default-sug satellite all
set chassis satellite-management upgrade-groups upgrade-group default-sug satellite 69-253

As the generated CLI commands show, Network Director deletes the standalone satellites (FPC 65 and FPC 66) and re-adds each one as a member of a cluster together with a newly connected switch: FPC 66 forms cluster-1 with FPC 67, and FPC 65 forms cluster-2 with FPC 68. Network Director also removes the clustered FPCs from any other SUG; in this example, the default SUG changes from "satellite all" to "satellite 69-253", which no longer includes FPCs 65 through 68. Network Director completely automates the tasks required for cluster formation as devices are connected to the aggregation device. If there are redundant connections from a standalone satellite to the aggregation device, all of those connections are added as cascade ports in the corresponding cluster configuration.
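Cluster command generation can be sketched as follows; with the cluster-2 values from the sample above, it reproduces the cluster-2 commands followed by the delete command for FPC 65. Member IDs are assigned in the order the members are passed (an assumption consistent with the sample, where the existing standalone satellite becomes member 0), passing several cascade ports covers the redundant-connection case, and the redundancy group and SUG commands are left out.

```python
def cluster_commands(name, cluster_id, cascade_ports, members, standalone=()):
    """Build set/delete commands for one satellite cluster.

    members: list of (fpc, system_id) in member-id order (assumed ordering).
    standalone: FPC slots currently configured as standalone satellites;
    their standalone entries are deleted once they move into the cluster.
    """
    base = f"set chassis satellite-management cluster {name}"
    cmds = [base, f"{base} cluster-id {cluster_id}"]
    # Every redundant link from the cluster becomes a cascade port of the cluster.
    cmds += [f"{base} cascade-ports {port}" for port in cascade_ports]
    for member_id, (fpc, system_id) in enumerate(members):
        cmds += [
            f"{base} fpc {fpc}",
            f"{base} fpc {fpc} member-id {member_id}",
            f"{base} fpc {fpc} system-id {system_id}",
        ]
    cmds += [f"delete chassis satellite-management fpc {fpc}"
             for fpc, _ in members if fpc in standalone]
    return cmds
```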

Managing Multihome Junos Fusion

After Junos Fusion is set up, the user might need to scale it up or down by adding or deleting cascade ports, or to replace an aggregation device. Network Director hides the complexity of these operations from the user by automating several of the subtasks and ensuring that the operations are executed successfully on the aggregation devices. Once Junos Fusion is discovered and managed in Network Director, it is listed on the Manage Fusion Fabrics landing page.

Figure 15: Manage Fusion Fabrics

The user must select the Junos Fusion instance from the landing page and invoke the Edit action to start making changes.

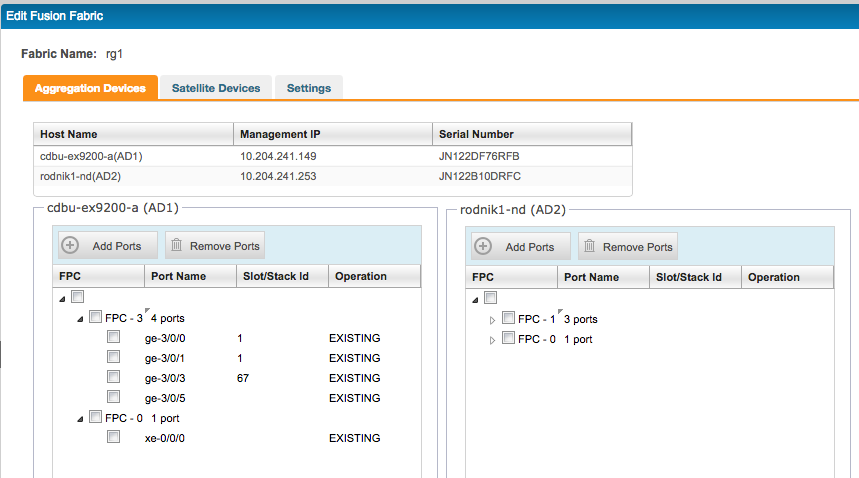

Figure 16: Edit Fusion Fabric

The user can add cascade ports to, or remove them from, one or both aggregation devices as needed, and Network Director pushes the configuration to the devices atomically. If the port selected as a cascade port has any existing port settings (unit 0), Network Director removes them before enabling the cascade port setting. If the user removes a cascade port that is connected to an online satellite device, Network Director automatically brings down the satellite device and deletes its configuration from the aggregation device to avoid traffic drops. For a cluster, removing the physical connection before removing the configuration is recommended, because removing members can split the ring or turn it into a linear topology. For this reason, Network Director does not support removing a member from a cluster.

If the multihome Junos Fusion Enterprise system was brought up using ZTP, the user can replace one aggregation device from Network Director. This action is available on the Manage Fusion Fabrics landing page. The user provides the MAC address of the replacement aggregation device, and Network Director fetches the ZTP server details from its database and provisions the current configuration of the replaced aggregation device to the ZTP servers.

Replacing a standalone satellite device is a plug-and-play activity. As the configuration pushed by Network Director to the aggregation device shows, a standalone satellite's FPC is not mapped to the hardware MAC address of the satellite device. To replace a satellite device, the user unplugs the device being taken down for maintenance and connects the replacement device.

Summary

This article demonstrates how Network Director automates the deployment of multihome Junos Fusion Enterprise with hybrid (standalone and cluster) satellites by automating various aspects that are required in deploying the Junos Fusion.

Network Director:

- Helps the user create a template and apply the template to bring up one or many instances of Junos Fusion

- Preprovisions the aggregation device for zero touch provisioning (ZTP)

- Provides a cabling plan to the user to make the connections between the aggregation and satellite devices

- Automates the pairing between the aggregation devices that are multihomed to the connected satellites

- Automates the satellite device configuration by pushing the configuration as satellite-capable devices are connected to the configured cascade ports on the aggregation device

- Automates the cluster solution as devices are connected

- Helps to troubleshoot and fix connection errors by running a connectivity report to diagnose problems

- Helps in managing Junos Fusion after deployment

- Provisions the configuration atomically to both aggregation devices so that the system is never in an inconsistent state